|

| (Textbook. Click for information.) |

Analysis of Algorithms

Spring 2012

Recitation 13

Final Exam Review

Partial Answers

|

CS 3343/3341 Analysis of Algorithms Spring 2012 Recitation 13 Final Exam Review Partial Answers |

|

| |||||||||||||||||||||||||||||||||||||||||||||||||

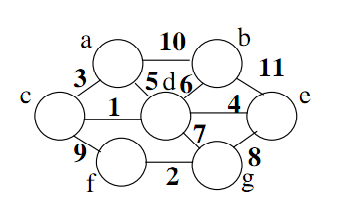

| Action | Contents of Piority Queue (* = removed) | ||||||

|---|---|---|---|---|---|---|---|

| Remove & Relax | a | b | c | d | e | f | g |

| Initial | 0 | ∞ | ∞ | ∞ | ∞ | ∞ | ∞ |

| a | 0* | 10 | 3 | 5 | ∞ | ∞ | ∞ |

| c | 0* | 10 | 3* | 4 | ∞ | 12 | ∞ |

| d | 0* | 10 | 3* | 4* | 8 | 12 | 11 |

| e | 0* | 10 | 3* | 4* | 8* | 12 | 11 |

| b | 0* | 10* | 3* | 4* | 8* | 12 | 11 |

| g | 0* | 10* | 3* | 4* | 8* | 12 | 11* |

| f | 0* | 10* | 3* | 4* | 8* | 12* | 11* |

|

Subset-Sum Problem (SS): Start with a set A of positive integers and a desired sum S. The decision question: Is there a subset A' of A such that sum( a | a in A' ) = S? |

|

Partition Problem (PART): Start with a set A of positive integers. The decision question: Is there a subset A' of A such that sum( a | a in A' ) = sum( a | a in A - A' )? [Here A - A' is the complement of A' in A.] |

|

| ||||||||||||||||||||||||||||||||||||||||||